I was recently asked to write the VR/AR section of a 3D printing review paper. As part of my section, I presented a literature review of current research investigating the use of virtual and augmented reality to view medical models. One of the growing uses of these technologies is as an alternative to physically 3D printing models for surgical planning. The benefit that virtual or augmented reality might bring to this type of visualization is likely significantly affected by the form factor and technical sophistication of the technology being used. To my dismay, many papers were using VR and AR as catch-all terms that covered anything from the Microsoft HoloLens (AR) to a conventional desktop display being used to look at 3D renderings of models (VR?). It occurred to me that in the medical field (and in general), we lack clear and distinguishable language that teases apart what makes all of these technologies different from each other.

What is it, essentially, that makes an iPad running Apple’s ARKit different from the Magic Leap AR headset? How does the Oculus Go VR headset differ from the Valve Index? In this post, I’m going to present a number of ways to draw understandable lines between these products, ranging from historical concepts to some new frameworks that I’ve come up with.

Two types of distinction

There are two different ways to draw lines between different VR and AR technologies. The first is by describing the technical distinctions between the actual devices. Examples might be the technology behind the flat-panel display found in a VR headset (LCD vs OLED panels, pixel resolution, etc.) or the kind of tracking technology (inside-out computer vision tracking, accelerometers, etc.). There’s some benefit to discussing these nitty-gritty details and I’ll go over some of the broad strokes, but they don’t provide a clear understanding of how the experience of a certain technology is different. The second type of distinction is in describing the capabilities of a certain product to provide a simulation experience, because that’s the goal of these technologies: creating engaging simulations of virtual elements and environments.

Technical distinctions: the basics of VR and the two kinds of AR

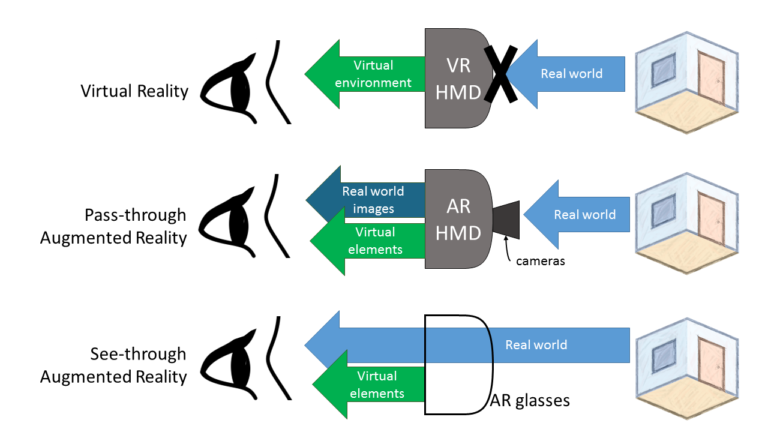

If you’re familiar with VR/AR technology and want to jump to the new concepts, you can skip past this part. These days, virtual reality is technologically achieved almost exclusively by a head-mounted display (HMD) that cuts off the user from the real-world environment and provides images of a virtual environment through the combination of lightweight, high-resolution screens and lenses. In the past, virtual reality simulation was also sometimes achieved through the use of something called a CAVE, which was essentially a large room with projectors or large screens presenting the virtual environment on the walls around you. It should be pretty clear why the new era of consumer-available VR is HMD based.

Augmented reality, at least the immersive kind (more on that in a bit), is generally achieved in two different ways. One way, called pass-through augmented reality, is by putting one or two cameras on the front of a virtual reality HMD and then combining the real-world video footage with the virtual elements presented by the HMD. The real world is not viewed directly. The second kind is called see-through augmented reality and is achieved by viewing the real world directly through clear goggles or glasses while virtual elements are displayed on the clear glasses.

Distinction by experience

Historical broad strokes

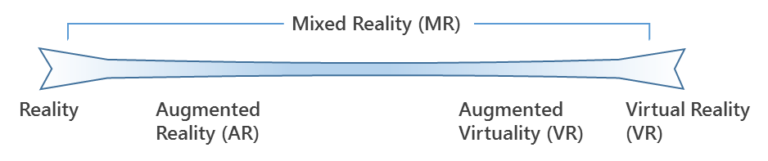

The reality-virtuality continuum is very useful for providing some language for the experiences enabled by mixed reality. But in the modern technological environment, we probably need to separate things further.

The 3 main characteristics of the simulation experience

There are 3 main characteristics that can be used to distinguish pretty much any VR/AR technological implementation. They are:

Virtuality

Virtuality is simply the reality-virtuality continuum restated and describes the proportion of real-world versus virtual elements being visualized by the user. Low “virtuality” means the user sees mostly the real world and high “virtuality” means the user sees mostly virtual elements.

Perspective generalization

Perspective generalization describes the ability to visualize virtual elements from any given user’s perspective. That is, a fully generalized perspective allows for virtual elements that exist in a persistent 3D space such that a user can move around the element and see it from different perspectives. A fully fixed perspective means that virtual elements are locked in position and orientation to the user’s own perspective.
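To make the difference concrete, here is a minimal sketch of how the same virtual element would appear to a user under three levels of perspective generalization. It is an illustration under stated assumptions, not how any particular headset computes things: the scene is reduced to a top-down 2D plane, the names `HeadPose`, `fixed_perspective`, `rotation_only` and `fully_generalized` are hypothetical, and the sign convention for yaw is arbitrary.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class HeadPose:
    """Hypothetical top-down head pose: position in metres, yaw in radians."""
    x: float
    z: float
    yaw: float = 0.0


def to_view_space(dx, dz, yaw):
    """Rotate a world-space offset by -yaw so it is expressed in the user's view frame."""
    c, s = math.cos(yaw), math.sin(yaw)
    return (dx * c + dz * s, -dx * s + dz * c)


def fixed_perspective(offset, pose):
    """Fully fixed: the element is locked to the user's view, so the pose is ignored."""
    return offset


def rotation_only(element, pose):
    """Intermediate case (assumed): head rotation changes the view, head translation does not."""
    return to_view_space(element[0], element[1], pose.yaw)


def fully_generalized(element, pose):
    """Fully generalized: the element persists in world space and the user moves around it."""
    return to_view_space(element[0] - pose.x, element[1] - pose.z, pose.yaw)


element = (0.0, 2.0)                    # two metres ahead of the starting position
stepped_right = HeadPose(x=1.0, z=0.0)  # the user takes one step to the right

print(fully_generalized(element, stepped_right))  # (-1.0, 2.0): element shifts left in view
print(rotation_only(element, stepped_right))      # (0.0, 2.0): the step is not registered
print(fixed_perspective(element, stepped_right))  # (0.0, 2.0): always glued to the view
```

Only the fully generalized case lets the user walk around the element; in the other two, stepping sideways leaves the element exactly where it was in the user's view.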

World cognizance

World cognizance describes the ability of a device to account for real-world structures and corresponding ability of virtual elements to interact with these structures as if they inhabited a shared physical space.

It’s worth noting that each of these characteristics is made possible by an enabling technology: virtuality is enabled by visualization technology, perspective generalization is enabled by tracking technology, and world cognizance is enabled by structure-sensing technology.

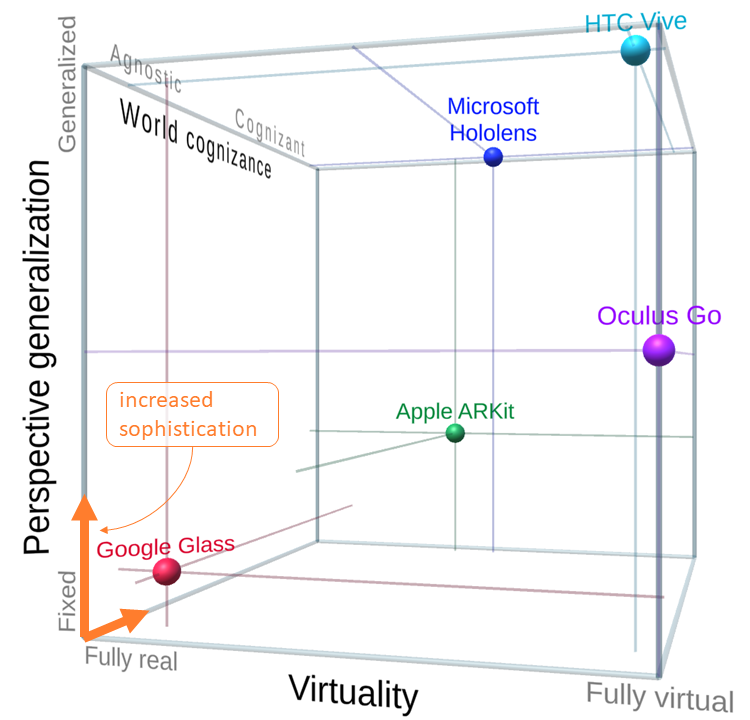

With these characteristics in hand, we can look at different products out there and understand and describe the differences between the experience they enable. You can see a 3D plot below that has each characteristic as an axis with a few recent technological examples.

Google Glass, a head-mounted display (HMD) that shows user-interface elements in the corner of the field of view for one eye, is low in virtuality, showing mostly the real world to the user. It presents a completely fixed perspective (i.e. the virtual elements maintain a static position and orientation with respect to the user’s view) and has minimal world cognizance, achieved by GPS location tracking only.

The Oculus Go is a mobile VR HMD that tracks rotations of the user’s head with inertial measurement units (IMUs). It has full virtuality, providing only an immersive virtual environment. It has a semi-generalized perspective, since the perspective of the virtual scene can be changed by head rotations but not by head translation, and it is entirely world agnostic.

The HTC Vive is a computer-connected VR HMD that has full positional and rotational tracking in 6 degrees-of-freedom (6DOF). It can optionally display the real world to the user through front-mounted cameras and so is slightly lower on the virtuality axis than the Oculus Go. The full 6DOF tracking allows for a fully generalized perspective, and the predefinition of safe movement boundaries that are presented to the user, should they move close to them, adds minimal world cognizance to the user’s experience.

Tablets or mobile phones using Apple’s ARKit to create AR experiences are in the middle range of virtuality, mixing real and virtual images on the device screen. They provide a semi-generalized perspective: virtual elements can exist in a persistent 3D space, but the perspective is that of the device’s camera, not the user’s eyes. More importantly, they do not provide a stereoscopic view of virtual elements, which greatly limits the perception of depth. By sensing surrounding structures, ARKit simulations provide high world cognizance.

The Microsoft HoloLens, a mobile AR HMD that provides a moderate field of view in which virtual elements are superimposed on the real world, holds a similar position in the continuum to ARKit. However, its position differs by having a fully generalized perspective, wherein elements exist in persistent 3D space for the stereoscopic perspective of the user’s eyes.

An increase in perspective generalization and an increase in world cognizance both represent an increase in sophistication in the resulting simulation, whereas the amount of “virtuality” being used is generally a design choice. So where would a desktop monitor displaying 3D renderings of models fit? It has no perspective generalization and no world cognizance. And given that it does not provide an immersive virtual visualization or a mix of real and virtual elements, it pretty clearly should not be considered any flavour of mixed reality, VR, or AR.
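One way to play with this framework is to treat each product as a point on the three axes and compare products only on the two axes tied to sophistication. The sketch below is a rough illustration: the `Device` class and the `at_least_as_sophisticated` helper are hypothetical, and the numeric scores are my own guesses at the qualitative placements described above, not measurements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    name: str
    virtuality: float                  # 0 = only real world, 1 = only virtual elements
    perspective_generalization: float  # 0 = fully fixed, 1 = fully generalized
    world_cognizance: float            # 0 = world agnostic, 1 = high


# Illustrative scores only (assumed for this sketch).
DEVICES = [
    Device("Google Glass", 0.1, 0.0, 0.1),
    Device("Oculus Go", 1.0, 0.5, 0.0),
    Device("HTC Vive", 0.9, 1.0, 0.1),
    Device("ARKit tablet or phone", 0.5, 0.5, 0.9),
    Device("Microsoft HoloLens", 0.5, 1.0, 0.9),
]


def at_least_as_sophisticated(a, b):
    """True if `a` matches or exceeds `b` on both sophistication axes.

    Virtuality is deliberately left out because it is a design choice.
    """
    return (
        a.perspective_generalization >= b.perspective_generalization
        and a.world_cognizance >= b.world_cognizance
    )


by_name = {d.name: d for d in DEVICES}
hololens = by_name["Microsoft HoloLens"]
arkit = by_name["ARKit tablet or phone"]
go = by_name["Oculus Go"]
glass = by_name["Google Glass"]

print(at_least_as_sophisticated(hololens, arkit))  # True
print(at_least_as_sophisticated(go, glass))        # False
print(at_least_as_sophisticated(glass, go))        # False
```

Comparing axis by axis rather than collapsing everything into one score is a deliberate choice here: with these assumed numbers, the Oculus Go and Google Glass each lead on a different axis, so neither is simply "more sophisticated" than the other.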

Summary

It’s useful to understand all the new technologies that are out there under the umbrella of mixed reality. One way to do that is by discussing the technical differences between product offerings. However, by understanding the three main characteristics that distinguish the experience enabled by these technologies, we can better understand and describe the fundamental distinctions between the products out there. Those characteristics are virtuality (the amount of real-world vs. virtual elements visualized), perspective generalization (the ability of a simulation to create objects that persist in 3D space independent of a given person’s perspective) and world cognizance (the ability of virtual elements to interact with real-world structures).